09. June 2024

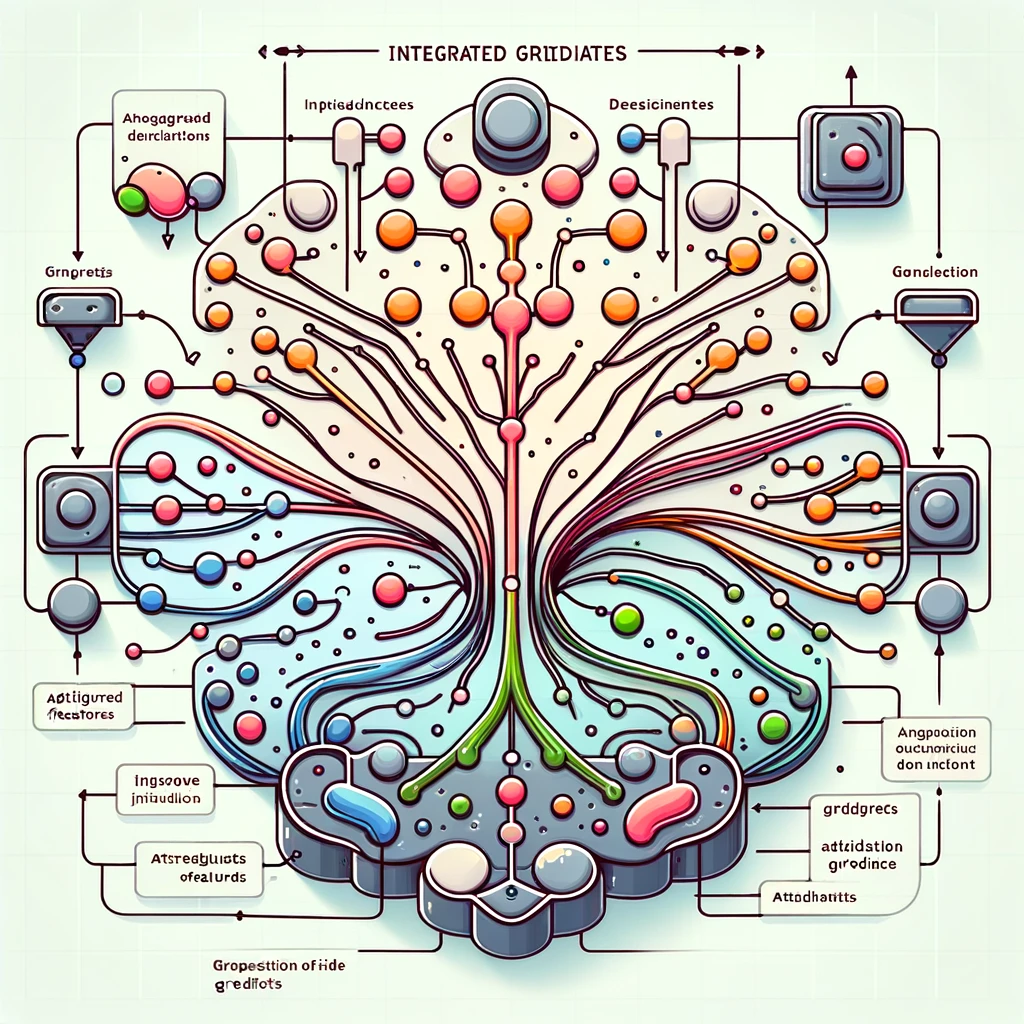

Integrated gradients

Interpreting the black box of deep neural networks

Introduction

Interpretability has become an essential concern in artificial intelligence and machine learning, especially for deep learning models, which often operate as black boxes. Integrated Gradients is one of the prominent methods developed to meet this need.

What are Integrated Gradients?

Integrated gradients is a technique for attributing the prediction of a neural network to its input features. It is designed to be used with differentiable models, particularly neural networks, to understand how each input feature contributes to the output prediction. The method was proposed by Mukund Sundararajan, Ankur Taly and Qiqi Yan in their 2017 paper, "Axiomatic Attribution for Deep Networks".

The Concept Behind Integrated Gradients

Integrated gradients aims to attribute a model's prediction to its input features in a way that satisfies certain axioms. These axioms include:

✔ Sensitivity

If an input and a baseline (a reference input, discussed below) differ in only one feature and produce different predictions, that feature should receive a non-zero attribution.

✔ Implementation Invariance

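Two models that are functionally equivalent, meaning they produce the same output for every input even if their internal implementations differ, should produce the same attributions for the same input.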

To satisfy these axioms, integrated gradients integrates the gradients of the model's output with respect to the input along a straight-line path from a baseline input to the actual input, and scales the result by the difference between the two.

Methodology

The methodology of integrated gradients can be broken down into the following steps:

Baseline Selection

The choice of baseline input is crucial for the effectiveness of integrated gradients. The baseline is typically a neutral input such as a zero vector or an input where all features are set to their mean or median values. Selecting an appropriate baseline can significantly influence the resulting attributions, making it an important consideration in the application of this method.
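A minimal sketch of the three baselines mentioned above, computed with NumPy from a small, made-up training matrix `X_train` (the data and variable names are illustrative assumptions, not taken from any specific dataset):

```python
import numpy as np

# Made-up training data for illustration: 4 samples, 3 features
X_train = np.array([[0.0, 1.0, 5.0],
                    [2.0, 3.0, 1.0],
                    [4.0, 2.0, 3.0],
                    [2.0, 6.0, 7.0]])

n_features = X_train.shape[1]
zero_baseline = np.zeros(n_features)           # every feature set to 0
mean_baseline = X_train.mean(axis=0)           # per-feature mean over the samples
median_baseline = np.median(X_train, axis=0)   # per-feature median, less sensitive to outliers

print("zero:  ", zero_baseline)
print("mean:  ", mean_baseline)
print("median:", median_baseline)
```

Which of these counts as "neutral" depends on the data; for this matrix the mean and median baselines already differ in the second feature.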

Path Integration

Consider a straight-line path from the baseline to the input. Mathematically, this can be represented as:

\[ x'(\alpha) = \alpha x + (1 - \alpha) x_{\text{baseline}}, \quad \alpha \in [0, 1] \]

where \(x\) is the actual input and \(x_{\text{baseline}}\) is the baseline input.

Gradient Computation

For each point along the path defined by the above equation, the gradient of the model's output with respect to the input is computed. These gradients provide insights into how sensitive the model's output is to changes in each input feature at different points along the path.
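The path and the gradient step can be sketched in NumPy. A real network would need automatic differentiation, so this sketch assumes a hypothetical toy model \(F(x) = \sigma(w \cdot x + b)\) (a single logistic unit) whose gradient \(\sigma'(z)\, w\) has a closed form; `w`, `b`, `model` and `model_grad` are illustrative names, not part of any library.

```python
import numpy as np

# Hypothetical toy model F(x) = sigmoid(w . x + b), standing in for a network
w = np.array([2.0, -1.0, 0.5])
b = -0.5

def model(x):
    # Accepts a single input of shape (d,) or a batch of shape (n, d)
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def model_grad(points):
    # Gradient of F at each row of `points` (shape (n, d)): sigmoid'(z) * w,
    # using sigmoid'(z) = s * (1 - s) with s = F(x)
    s = model(points)
    return (s * (1.0 - s))[:, None] * w

x = np.array([3.0, -2.0, 1.0])
baseline = np.zeros_like(x)

# Points x'(alpha) = alpha * x + (1 - alpha) * baseline for alpha = 0, 0.2, ..., 1
alphas = np.linspace(0.0, 1.0, 6)
path = alphas[:, None] * x + (1.0 - alphas[:, None]) * baseline

grads = model_grad(path)   # one gradient row per point on the path, shape (6, 3)
print(np.round(grads, 4))
```

In this toy case the gradient is largest near the baseline and shrinks almost to zero at the input, where the sigmoid saturates. Looking only at the gradient at \(x\) would therefore understate the features' influence, which is why the method accumulates gradients along the whole path.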

Integral Calculation

Integrate these gradients with respect to \(\alpha\) from 0 to 1 and scale the result by the difference between the input and the baseline. The integrated gradient for each input feature \(i\) is given by:

\[ IG_i(x) = (x_i - x_{\text{baseline},i}) \int_{0}^{1} \frac{\partial F(x'(\alpha))}{\partial x_i} d\alpha \] where \(F\) is the model's output function. This integral essentially accumulates the contributions of each input feature to the model's output along the specified path.
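As a short worked example, take a hypothetical two-feature model \(F(x) = x_1 x_2\) with a zero baseline, so that \(x'(\alpha) = \alpha x\). The partial derivatives along the path are \(\alpha x_2\) for \(x_1\) and \(\alpha x_1\) for \(x_2\), which gives:

\[ IG_1(x) = (x_1 - 0) \int_{0}^{1} \alpha x_2 \, d\alpha = \frac{x_1 x_2}{2}, \qquad IG_2(x) = (x_2 - 0) \int_{0}^{1} \alpha x_1 \, d\alpha = \frac{x_1 x_2}{2} \]

\[ IG_1(x) + IG_2(x) = x_1 x_2 = F(x) - F(x_{\text{baseline}}) \]

The two features share the output equally, and the attributions add up exactly to the change in output between baseline and input, a property the original paper calls completeness.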

Computational Considerations

Efficiency

While integrated gradients is theoretically sound, computing it can be resource-intensive: for a neural network the integral has no closed form, and every point used to approximate it costs one gradient evaluation (a forward and a backward pass). The total cost therefore grows with both the size of the model and the number of points. Numerical integration and sampling methods approximate the integral with a finite, manageable number of gradient evaluations.

Numerical Integration

Numerical integration techniques such as the trapezoidal rule or Simpson's rule can be used to approximate the integral. These methods discretize the path into a finite number of steps, evaluate the gradient at the step boundaries, and combine these gradients in a weighted sum to estimate the integral.
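A minimal trapezoidal-rule sketch, again using a hypothetical single-logistic-unit model \(F(x) = \sigma(w \cdot x + b)\) with a closed-form gradient as a stand-in for a network (all names and values are assumptions). The sum of the attributions is compared with \(F(x) - F(x_{\text{baseline}})\), which the exact integral would match.

```python
import numpy as np

# Hypothetical toy model F(x) = sigmoid(w . x + b), a stand-in for a network
w = np.array([2.0, -1.0, 0.5])
b = -0.5

def model(x):
    # Accepts a single input of shape (d,) or a batch of shape (n, d)
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def model_grad(points):
    # Closed-form gradient sigmoid'(z) * w for each row of `points` (shape (n, d))
    s = model(points)
    return (s * (1.0 - s))[:, None] * w

def integrated_gradients_trapezoid(x, baseline, m=50):
    """Approximate IG with the trapezoidal rule on m equal sub-intervals of [0, 1]."""
    alphas = np.linspace(0.0, 1.0, m + 1)            # m + 1 grid points, both ends included
    path = alphas[:, None] * x + (1.0 - alphas[:, None]) * baseline
    grads = model_grad(path)                         # shape (m + 1, d)
    # Each sub-interval contributes the mean of its two endpoint gradients, weighted by 1/m
    integral = ((grads[:-1] + grads[1:]) / 2.0).mean(axis=0)
    return (x - baseline) * integral

x = np.array([3.0, -2.0, 1.0])
baseline = np.zeros_like(x)
ig = integrated_gradients_trapezoid(x, baseline, m=50)
print("attributions:", np.round(ig, 4))
print("sum of attributions:", round(float(ig.sum()), 4))
print("F(x) - F(baseline):", round(float(model(x) - model(baseline)), 4))
```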

Sampling Methods

In practice, the gradient is evaluated at a finite number of points along the path rather than continuously. For example, one might choose \(m\) evenly spaced values of \(\alpha\) between 0 and 1 and compute the gradient at each of them. The integral is then approximated by averaging these gradients and scaling by the difference between the input and the baseline (a Riemann sum):

\[ IG_i(x) \approx \frac{x_i - x_{\text{baseline},i}}{m} \sum_{j=1}^{m} \frac{\partial F(x'(\alpha_j))}{\partial x_i} \]

where \(\alpha_j = j/m\) for \(j = 1, \dots, m\) are the sampled points along the path.
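The Riemann-sum formula translates almost line for line into NumPy. This sketch assumes the same hypothetical toy model \(F(x) = \sigma(w \cdot x + b)\) with a closed-form gradient, and the function name `integrated_gradients` is illustrative. It reports how far the attributions' sum is from \(F(x) - F(x_{\text{baseline}})\) for several values of \(m\).

```python
import numpy as np

# Hypothetical toy model F(x) = sigmoid(w . x + b) with a closed-form gradient
w = np.array([2.0, -1.0, 0.5])
b = -0.5

def model(x):
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def model_grad(points):
    s = model(points)                          # points: (n, d) -> s: (n,)
    return (s * (1.0 - s))[:, None] * w

def integrated_gradients(x, baseline, m=50):
    """Riemann-sum approximation of IG with alpha_j = j / m for j = 1..m."""
    alphas = np.arange(1, m + 1) / m           # right endpoints of m equal steps
    path = alphas[:, None] * x + (1.0 - alphas[:, None]) * baseline
    avg_grad = model_grad(path).mean(axis=0)   # (1/m) * sum of the m gradients
    return (x - baseline) * avg_grad

x = np.array([3.0, -2.0, 1.0])
baseline = np.zeros_like(x)
target = model(x) - model(baseline)            # what the attributions should sum to

for m in (10, 50, 300):
    ig = integrated_gradients(x, baseline, m)
    print(m, np.round(ig, 4), "completeness gap:", abs(ig.sum() - target))
```

For this toy model the completeness gap shrinks roughly in proportion to \(1/m\), so extra accuracy costs extra gradient evaluations.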

Practical Implementation

✔ Libraries and Tools

Several machine learning libraries provide implementations of integrated gradients, making it easier to apply this technique in practice. For example:

TensorFlow

TensorFlow does not ship integrated gradients as a core API, but the official TensorFlow tutorials include a step-by-step implementation built on tf.GradientTape.

Captum

An interpretability library for PyTorch, Captum provides a comprehensive implementation of integrated gradients along with other attribution methods.

✔ Use Cases

Integrated gradients can be used in various scenarios, such as:

Feature Importance

Understanding which features are most influential in the model's predictions.

Model Debugging

Detecting problems in model behavior, such as reliance on spurious features or unwanted biases, so that they can be corrected.

Transparency

Providing insights into model decisions for stakeholders who require interpretability.
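For feature importance, one common pattern is to rank features by the magnitude of their attributions, since a large negative attribution is as influential as a large positive one. A minimal sketch with made-up feature names and attribution values (in practice the attributions would come from an integrated-gradients computation for one prediction):

```python
import numpy as np

# Hypothetical feature names and attribution values, for illustration only
feature_names = np.array(["age", "income", "tenure", "num_purchases"])
attributions = np.array([0.12, -0.45, 0.03, 0.30])

# Rank by absolute attribution: large positive or large negative both mean "influential"
order = np.argsort(-np.abs(attributions))
for name, value in zip(feature_names[order], attributions[order]):
    print(f"{name:>14}: {value:+.2f}")
```

Here "income" ranks first even though its attribution is negative. Averaging absolute attributions over many inputs is one option for a global ranking.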

Used through these libraries and in these settings, integrated gradients offers a practical way to make deep learning models more interpretable.

Advantages and Limitations

✔ Advantages

Theoretical Soundness

Integrated gradients is grounded in well-defined axioms, providing a robust theoretical basis for attribution.

Implementation Invariance

Ensures consistent attributions across different implementations of the same model.

✔ Limitations

Baseline Dependence

The choice of baseline can significantly affect the attributions.
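The effect is easy to see with a hypothetical linear model \(F(x) = w \cdot x\): its gradient is the constant \(w\), so the integral is exact and \(IG_i(x) = (x_i - x_{\text{baseline},i})\, w_i\). A sketch comparing a zero baseline with a made-up mean baseline (all values are illustrative):

```python
import numpy as np

# Hypothetical linear model F(x) = w . x; its gradient is the constant w,
# so integrated gradients reduces exactly to (x - baseline) * w
w = np.array([2.0, -1.0, 0.5])

def integrated_gradients_linear(x, baseline):
    return (x - baseline) * w

x = np.array([3.0, 2.0, 4.0])
zero_baseline = np.zeros(3)
mean_baseline = np.array([2.0, 3.0, 4.0])   # e.g. a per-feature training mean (made up)

ig_zero = integrated_gradients_linear(x, zero_baseline)
ig_mean = integrated_gradients_linear(x, mean_baseline)
print("zero baseline:", ig_zero)
print("mean baseline:", ig_mean)
```

With the zero baseline the attributions are 6, -2 and 2; with the mean baseline they become 2, 1 and 0. The second feature's attribution flips sign, and the third feature, which equals its baseline value, receives no credit at all.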

Computational Cost

Approximating the integral requires one gradient evaluation per step, often tens to hundreds of steps per input, which can be computationally expensive for large models.

References

- (Article) Axiomatic Attribution for Deep Networks, Mukund Sundararajan, Ankur Taly, Qiqi Yan

- (Article) Enhanced Integrated Gradients: improving interpretability of deep learning models using splicing codes as a case study, A. Jha, J. K. Aicher, M. R. Gazzara

- (Article) Guided Integrated Gradients: an Adaptive Path Method for Removing Noise, A. Kapishnikov, S. Venugopalan, B. Avci, B. Wedin, M. Terry, T. Bolukbasi

- (Article) A Rigorous Study of Integrated Gradients Method and Extensions to Internal Neuron Attributions, Daniel Lundstrom, Tianjian Huang, Meisam Razaviyayn